NIST AI RMF Playbook Explained: How to Use the AI Risk Management Framework Effectively

Learn how to apply the NIST AI RMF and Playbook to reduce AI risks like bias, privacy issues, and transparency gaps, while still encouraging innovation.

According to a widely cited PwC analysis, artificial intelligence could contribute as much as $15.7 trillion to the global economy by 2030. This staggering figure underscores both the immense value and growing influence of AI across industries.

While AI has tremendous potential, including for identifying risks, many concerns surround AI development. The most common are bias in automated decision-making, lack of transparency, security and privacy vulnerabilities, and ethical or regulatory gaps. These emerging AI risks are not theoretical; they have already materialized and affected real industries.

To manage these challenges without stalling innovation, organizations need a clear, flexible, and actionable approach to AI risk. That’s where the NIST AI Risk Management Framework (AI RMF) and its companion Playbook come into play. In this post, we’ll explain what both tools are, how they differ, and how your organization can use them together to build trustworthy, responsible AI systems.

What Is the AI RMF and Why Does It Matter?

Before we dive deeper into AI RMF, let's do a brief overview of what it is. AI RMF stands for Artificial Intelligence Risk Management Framework. It is a structured, flexible guide developed to help organizations manage the risks that come with the use of AI systems.

In simple terms, the AI RMF is about managing the risks of AI, not using AI to manage risk. It provides a shared approach to understanding and reducing issues like bias, lack of transparency, and security vulnerabilities, while still supporting innovation.

The AI risk management framework was developed by the National Institute of Standards and Technology (NIST). NIST is a widely known and followed leader in cybersecurity and technology standards. The goal was to create a resource that could be used across sectors, both public and private, to ensure AI is being developed and used responsibly.

The NIST AI RMF is crucial for organizations because it emphasizes flexibility and adaptability.

Rather than being a strict checklist, it's designed to evolve alongside AI technologies and to be tailored to different levels of maturity. This means an organization developing a new chatbot can apply the RMF just as readily as a hospital using AI-assisted diagnostics.

The AI RMF's importance lies in its ability to bridge the gap between safety and innovation. It does not aim to slow down adoption; it exists to make sure that adoption happens with oversight and responsible AI practices.

What Is the NIST AI RMF Playbook?

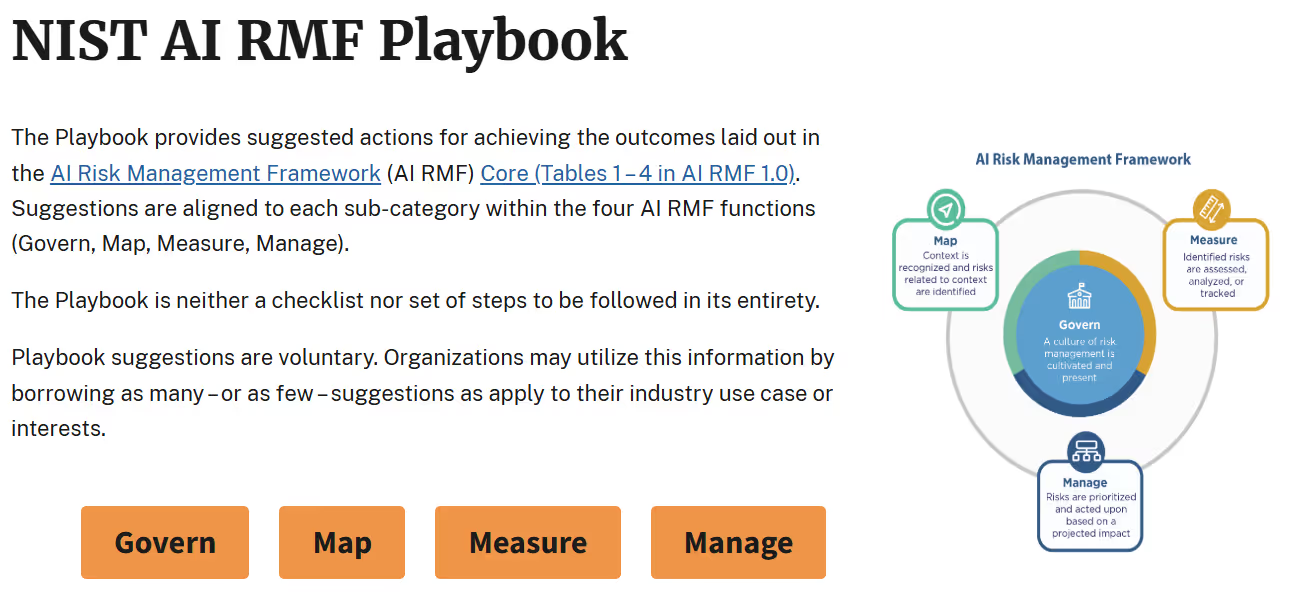

While the AI RMF sets the foundation for managing AI risks, the NIST AI RMF Playbook serves as a practical guide to help organizations implement the framework in real-world environments. The Playbook builds on AI RMF 1.0 and offers actionable guidance, references, and best practices to help organizations integrate trustworthy AI principles into their workflows.

The Playbook is voluntary and customizable, making it ideal for organizations at any maturity level.

Whether you're designing a new AI model or maintaining an existing one, the Playbook provides step-by-step suggestions aligned with the four key functions of the AI RMF:

- Govern: Establish oversight and accountability structures for AI development and use.

- Map: Understand and document the context, goals, and risks of your AI system.

- Measure: Identify metrics and indicators that assess whether your AI aligns with trustworthy goals.

- Manage: Respond to identified risks, monitor ongoing performance, and improve over time.

Unlike a one-size-fits-all checklist, the Playbook allows you to filter and tailor the information based on your industry, risk tolerance, and organizational needs. It’s designed to grow alongside your AI program and can be adjusted as your system evolves or as new guidance becomes available.

Why the Playbook Matters

The value of the Playbook lies in its ability to operationalize the AI RMF. Many organizations struggle with turning high-level principles into concrete steps. The Playbook bridges this gap by offering:

- Real-world examples and best practices

- Suggested actions for each RMF function

- Flexibility to pick and choose relevant portions

- Links to external resources and research

Because the Playbook is still evolving, public feedback is encouraged. NIST accepts ongoing input and periodically updates the Playbook based on community suggestions. This ensures the resource remains relevant, practical, and informed by the latest AI risk trends.

Implementing the NIST AI RMF in Your Organization

As mentioned earlier in this blog, the AI RMF is organized around four core functions: Govern, Map, Measure, and Manage.

Although this list looks linear, AI risk management is closer to a cycle, which is why the image in the previous section shows the functions arranged in a circle. In other words, where you start depends on what's best for your organization. To reflect that, the walkthrough below takes the functions in a different order: Map, Measure, Manage, then Govern.

- Map: Understand and document the context and risks of AI systems

- Measure: Evaluate the effectiveness and impact of AI

- Manage: Take action to address and reduce identified risks

- Govern: Establish oversight and accountability structures

Each function includes categories and subcategories to guide deeper implementation. The Playbook supports each of these functions with implementation tips and real-world references.

Map: Understand the Risks and Context

Before deploying or even developing an AI system, organizations need to clearly understand what the AI is doing, how it operates, and what risks may arise.

Mapping includes:

- Defining the intended use of the system

- Identifying impacted stakeholders

- Recognizing potential areas for harm or bias

The Playbook recommends using contextual analysis tools and stakeholder mapping exercises during this phase. This helps surface risks early and ensures your use case aligns with organizational values.
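As a rough illustration, the three mapping items above could be captured in a small record that flags what is still undocumented. This is only a sketch: the `AISystemContext` class, its field names, and the sample chatbot are hypothetical and do not come from the Playbook.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AISystemContext:
    """Hypothetical record for the Map step; field names are illustrative."""

    name: str
    intended_use: str
    stakeholders: list[str] = field(default_factory=list)
    potential_harms: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """Return the mapping items that have not been documented yet."""
        gaps = []
        if not self.intended_use.strip():
            gaps.append("intended_use")
        if not self.stakeholders:
            gaps.append("stakeholders")
        if not self.potential_harms:
            gaps.append("potential_harms")
        return gaps


# Example: a chatbot whose potential harms have not been written down yet.
chatbot = AISystemContext(
    name="support-chatbot",
    intended_use="Answer billing questions for existing customers",
    stakeholders=["customers", "support agents"],
)
print(chatbot.missing_items())  # ['potential_harms']
```

A record like this can live alongside the model's documentation so that gaps are visible before development moves forward.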

Measure: Assess AI Performance and Risk

Once risks are mapped, it’s time to quantify and evaluate them. This includes:

- Setting performance and fairness benchmarks

- Evaluating data quality

- Using tools to understand model behavior

The Playbook offers resources to help organizations define metrics for transparency, accuracy, robustness, and fairness, and suggests ongoing evaluation techniques to detect emerging risks.
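To make the idea of a fairness benchmark concrete, here is a minimal sketch of one common metric, the demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. The metric choice, function names, and sample data are assumptions for illustration; the Playbook does not prescribe a specific metric, and the right one depends on your use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up data: 1 = approved, 0 = denied.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, groups)
print(f"{gap:.2f}")  # 0.50
```

A gap of zero means every group receives positive outcomes at the same rate. What counts as an acceptable gap is a policy decision, not something the code can settle.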

Manage: Mitigate Risks Proactively

With risks identified and measured, the next step is to reduce or eliminate them through technical, procedural, or human-centered interventions.

Risk management strategies might include:

- Updating datasets to remove bias

- Switching or retraining algorithms

- Introducing human review steps

The Playbook offers risk mitigation strategies based on best practices from industry and academia to help guide this phase.
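One simple way to introduce a human review step is to send low-confidence predictions to a person instead of acting on them automatically. The sketch below assumes the model exposes a confidence score between 0 and 1; the `route_decision` function and the 0.8 threshold are hypothetical and would need tuning for a real system.

```python
def route_decision(confidence: float, threshold: float = 0.8) -> str:
    """Return 'auto' for confident predictions, 'human_review' otherwise."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "auto" if confidence >= threshold else "human_review"


print(route_decision(0.95))  # auto
print(route_decision(0.62))  # human_review
```

Lowering the threshold reduces reviewer workload but lets more uncertain decisions through, so the value is worth revisiting as monitoring data accumulates.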

Govern: Build Oversight Structures and Policies

Governance ensures AI is not only effective, but accountable. It involves:

- Assigning roles and responsibilities for AI oversight

- Establishing clear communication and reporting procedures

- Aligning policies with legal and ethical standards

The Playbook emphasizes the importance of creating AI oversight committees, setting up internal audit mechanisms, and defining escalation paths if things go wrong.
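An escalation path can be written down as plainly as a lookup from incident severity to the people who must be notified. The severity levels and role names below are invented for illustration and are not taken from the Playbook; substitute your own organization's structure.

```python
from __future__ import annotations

from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical roles; each level also notifies every level below it.
ESCALATION_PATH = {
    Severity.LOW: "model owner",
    Severity.MEDIUM: "AI oversight committee",
    Severity.HIGH: "executive sponsor and legal",
}


def contacts_for(severity: Severity) -> list[str]:
    """List every role to notify for an incident of the given severity."""
    return [
        role
        for level, role in sorted(ESCALATION_PATH.items())
        if level <= severity
    ]


print(contacts_for(Severity.MEDIUM))
# ['model owner', 'AI oversight committee']
```

Keeping the path in one reviewed place makes it easier to audit who was supposed to be informed when something goes wrong.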

How to Ensure Trustworthy AI Systems

Successfully implementing the NIST AI RMF is only part of the equation. To truly optimize AI's value, organizations must focus on building and maintaining trust in their AI systems, not just technically but also ethically. So how can organizations ensure their AI systems are trustworthy?

Transparency

AI systems need to be clear and understandable to users and regulators so that everyone can see how decisions are made. Transparency builds confidence by showing why a decision was made. Without it, users may distrust the AI’s answers.

Organizations can support transparency by using explainability tools that show how AI models reach their decisions, for example by highlighting key data points or offering plain-language summaries. Maintaining detailed documentation about data sources, design, and limitations supports transparency as well. These practices help ensure everyone can evaluate and trust AI outputs.

Fairness

For users to trust AI systems, those systems must be designed to avoid bias and discrimination, ensuring equal treatment for all users. When AI exhibits bias or unfair behavior, it can cause real harm, undermining public trust and discouraging use of the systems.

Organizations can promote fairness by regularly auditing their data to detect biases. If any are identified, it's critical to address and resolve them promptly. Proactive mitigation not only protects users but also reinforces ethical AI practices.

Security & Privacy

AI systems need to protect sensitive data and guard against any malicious attacks. A single data breach can cause major damage. These attacks not only compromise user information but also damage the organization's reputation and trustworthiness.

To prevent breaches, organizations need to implement strong encryption and access controls to protect all user data. In addition to technical safeguards, organizations must also ensure they remain compliant with privacy laws such as HIPAA or GDPR.

Accountability

Things will go wrong, and when they do, there needs to be a clear line of communication for investigating what happened and deciding on next steps. The response will vary depending on the error. If it’s something serious, organizations may need to report it to a government agency.

Conclusion

As AI becomes a more powerful and integrated part of business and government, it also presents new layers of risk. The NIST AI RMF gives organizations a framework to manage those risks thoughtfully. The AI RMF Playbook gives them the tools to take action.

Together, these resources help organizations build AI systems that are not just effective, but trustworthy, secure, and fair. Whether you're just beginning your AI journey or refining mature processes, the RMF and Playbook offer scalable guidance that evolves alongside your innovation.
