Cyber AB September Town Hall: 7 Key Takeaways

There was a lot covered during this meeting so buckle up. Here are our key takeaways for the September Town Hall.

The Cyber AB held its monthly virtual Town Hall meeting on September 27, 2022. Guest speaker Nick DelRosso of the Defense Industrial Base Cybersecurity Assessment Center (DIBCAC) presented findings from the most recent Medium assessments.


Progress of the Joint Surveillance Program

Since late this summer, authorized CMMC Third-Party Assessment Organizations (C3PAOs) have been working alongside the DIBCAC to conduct voluntary assessments. These assessments have followed the existing process for DIBCAC High Assurance assessments, not the recently released draft CMMC Assessment Process (CAP).

According to Nick, the Joint Surveillance Program has completed its first three assessments, but the team is still working on entering the results into the Supplier Performance Risk System (SPRS). When asked if he could provide updates on the outcome of the assessments, he declined, citing the completion of only three so far and a desire by all parties to remain anonymous.

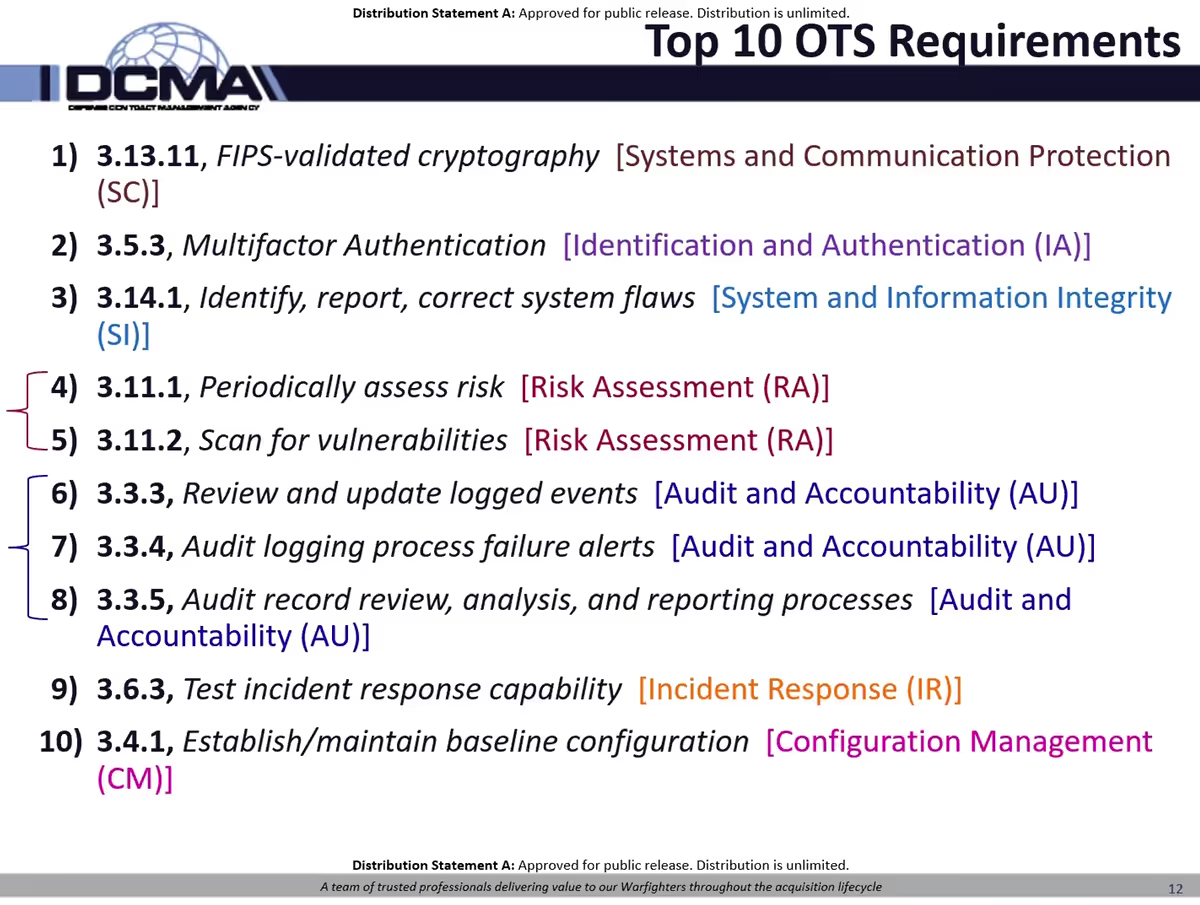

Top 10 OTS Requirements

When it comes to practices assessed as other than satisfied (OTS), the DIBCAC has found 10 common practices that organizations struggle with. Nick emphasized that number one is FIPS-validated cryptography and number two is multi-factor authentication.

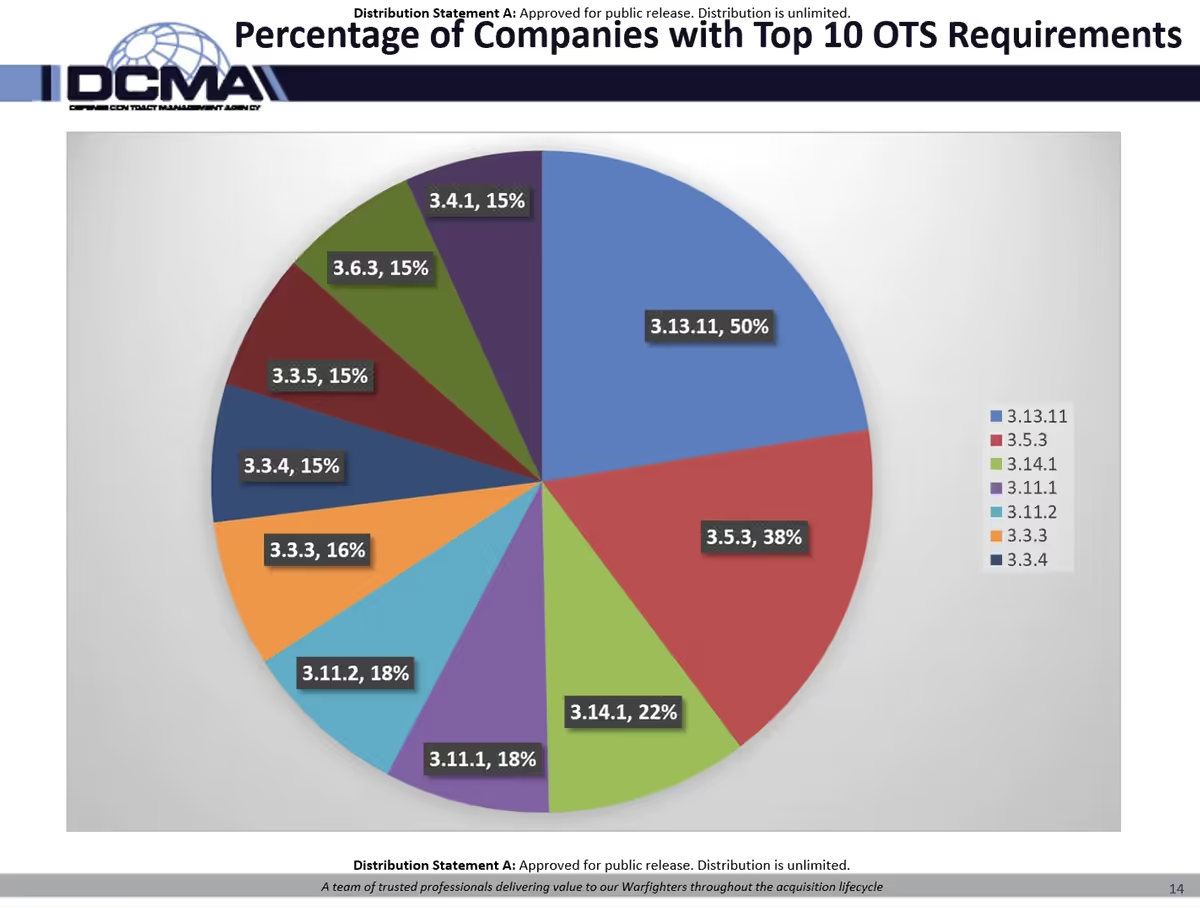

Nick provided a pie chart showing a breakdown of the top 10 most common practices not met. The two most common OTS practices accounted for 12%. The other eight practices in the top 10 accounted for 17%. The remaining 100 practices accounted for 71%.

Nick produced a second pie chart to elaborate on the FIPS-validated cryptography practice. Fifty percent of companies with at least one of the Top 10 OTS practices failed to meet practice 3.13.11.

Patches and updates often cause FIPS validation to fall out of compliance. DIBCAC prefers a temporary deficiency over not patching or updating the system. Temporary deficiencies don’t impact the score if the practice was recently compliant.

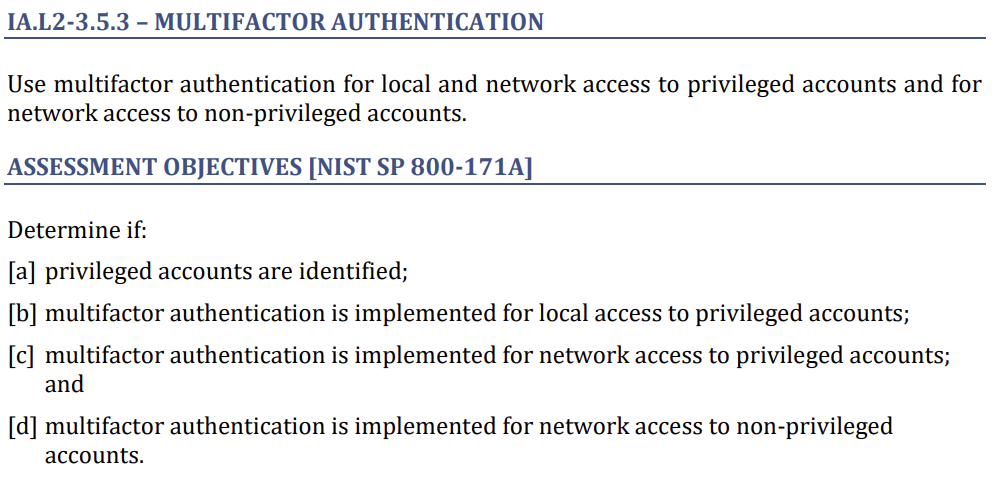

There’s less flexibility in scoring for multi-factor authentication (MFA). There’s also a misperception that this practice only applies when connecting to an outside system. According to Nick, this practice applies to any network access, meaning any connection to a system outside of the box you’re working in requires MFA.

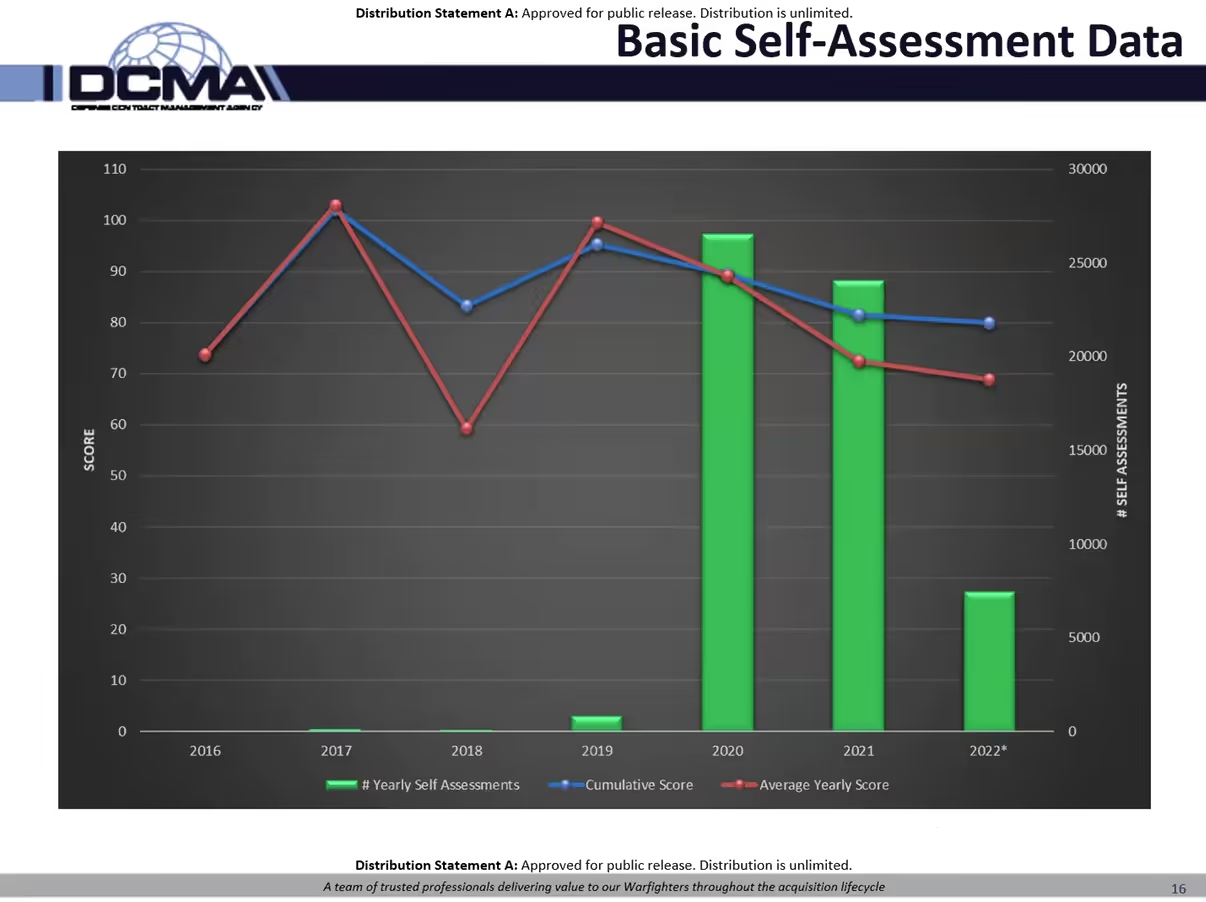

Basic Self-Assessment Data

Nick also provided insight into self-assessment scores over the past few years. In 2019, the average self-assessment score was between 90 and 100 points. As more companies started reporting, the average scores started declining.

The DIBCAC team wasn’t surprised by this trend. Early adopters of self-assessments may have paid more attention to the requirements. As the requirements became applicable to all companies in the defense industrial base (DIB) handling controlled unclassified information (CUI), the average scores have fallen. The data presented in the combined chart is representative through July 2022.

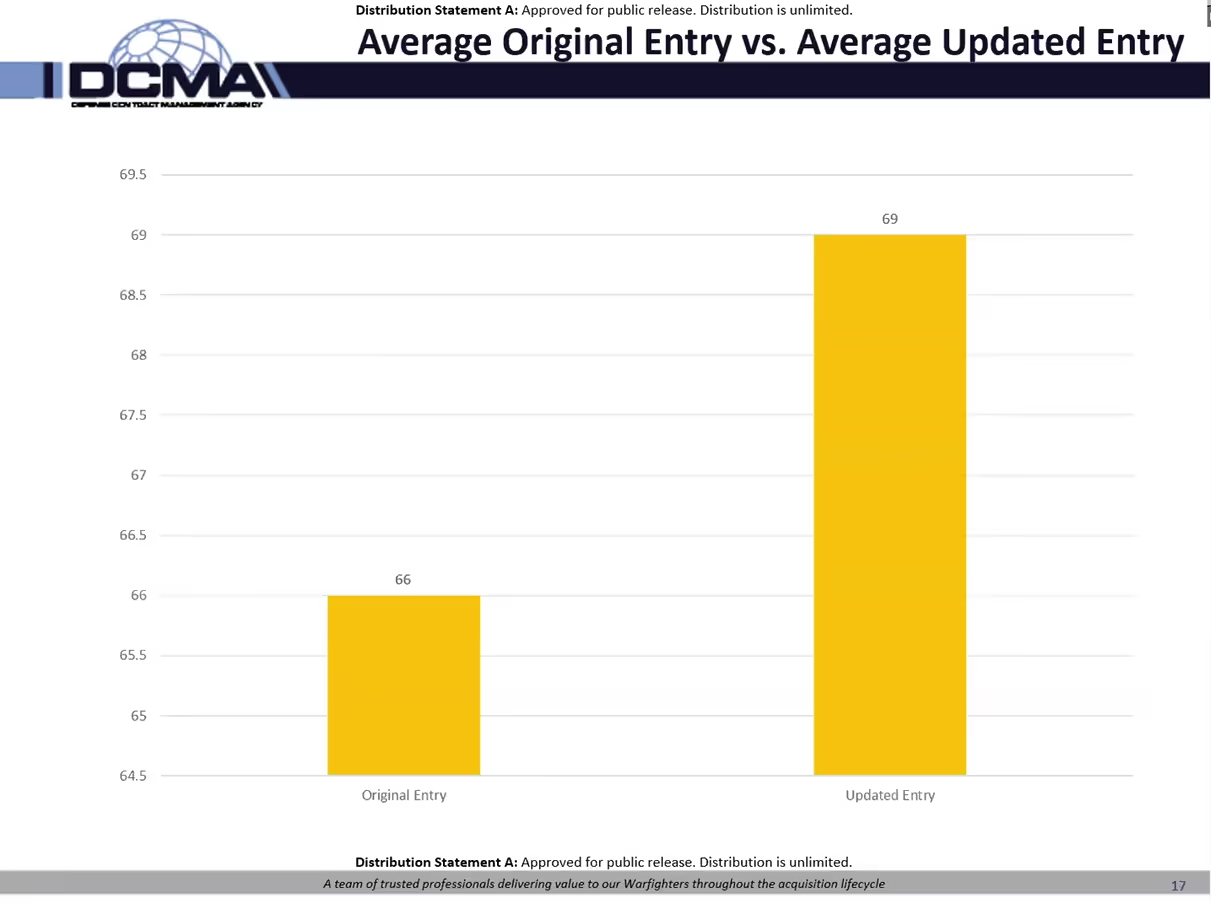

DIBCAC expects scores to rise in 2023 as companies that were first to report in 2020 report again. The existing requirements state that a contractor's SPRS score must be no more than three years old. Nothing prohibits updating those scores more frequently. DIBCAC has seen a noticeable increase in average updated entries for original entries.
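As a rough illustration of the three-year rule, the check below tests whether an assessment date is still current on a given day. This is a minimal sketch: the function name, the inclusive treatment of the anniversary date, and the handling of February 29 are assumptions made for the example, not details from the Town Hall.

```python
from datetime import date

MAX_SCORE_AGE_YEARS = 3  # the three-year limit described in the article


def score_is_current(assessment_date, today):
    """Return True if the assessment is no more than three years old on `today`.

    Assumption: the third anniversary of the assessment still counts as current.
    """
    target_year = assessment_date.year + MAX_SCORE_AGE_YEARS
    try:
        expiry = assessment_date.replace(year=target_year)
    except ValueError:
        # Feb 29 has no counterpart in a non-leap year; fall back to Feb 28.
        expiry = assessment_date.replace(year=target_year, day=28)
    return today <= expiry


town_hall = date(2022, 9, 27)
print(score_is_current(date(2020, 1, 15), town_hall))
print(score_is_current(date(2019, 6, 1), town_hall))
```

A score entered in January 2020 would still be current on the date of the Town Hall, while one entered in June 2019 would already be due for an update.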

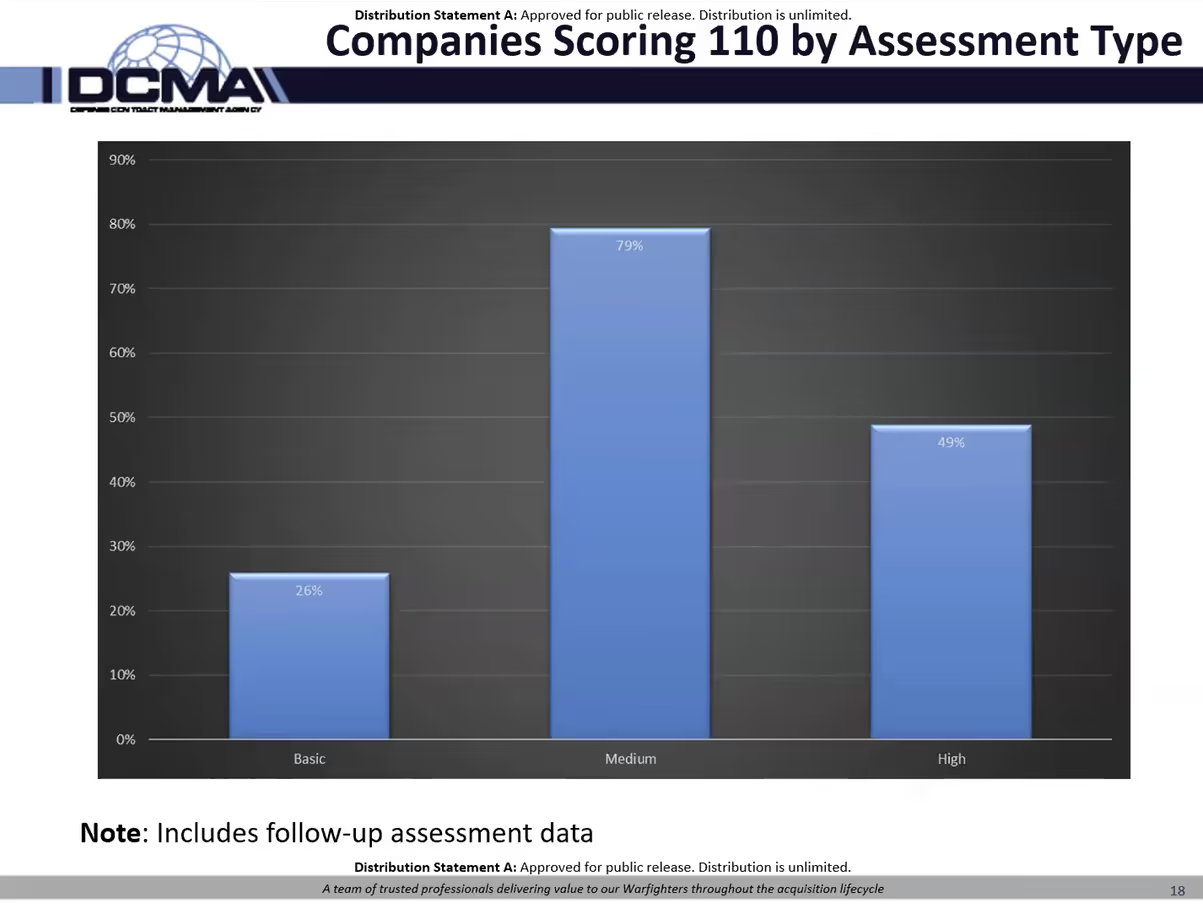

Companies Scoring 110 by Assessment Type

Nick provided a breakdown of the percentage of perfect scores by assessment type. As a reminder, the three types of DIBCAC assessments include:

- Basic - a self-assessment completed by the contractor

- Medium - involves the DIBCAC reviewing a company’s system security plan (SSP)

- High - involves DIBCAC on-site verification, examination and demonstration

Contractors entering basic assessment scores don't update their scores after completing their plan of action and milestones (POA&M). DIBCAC follows up on the POA&M with Medium and High assessments. This feedback mechanism may contribute towards higher scores compared to basic assessments.

Medium Assessment Studies

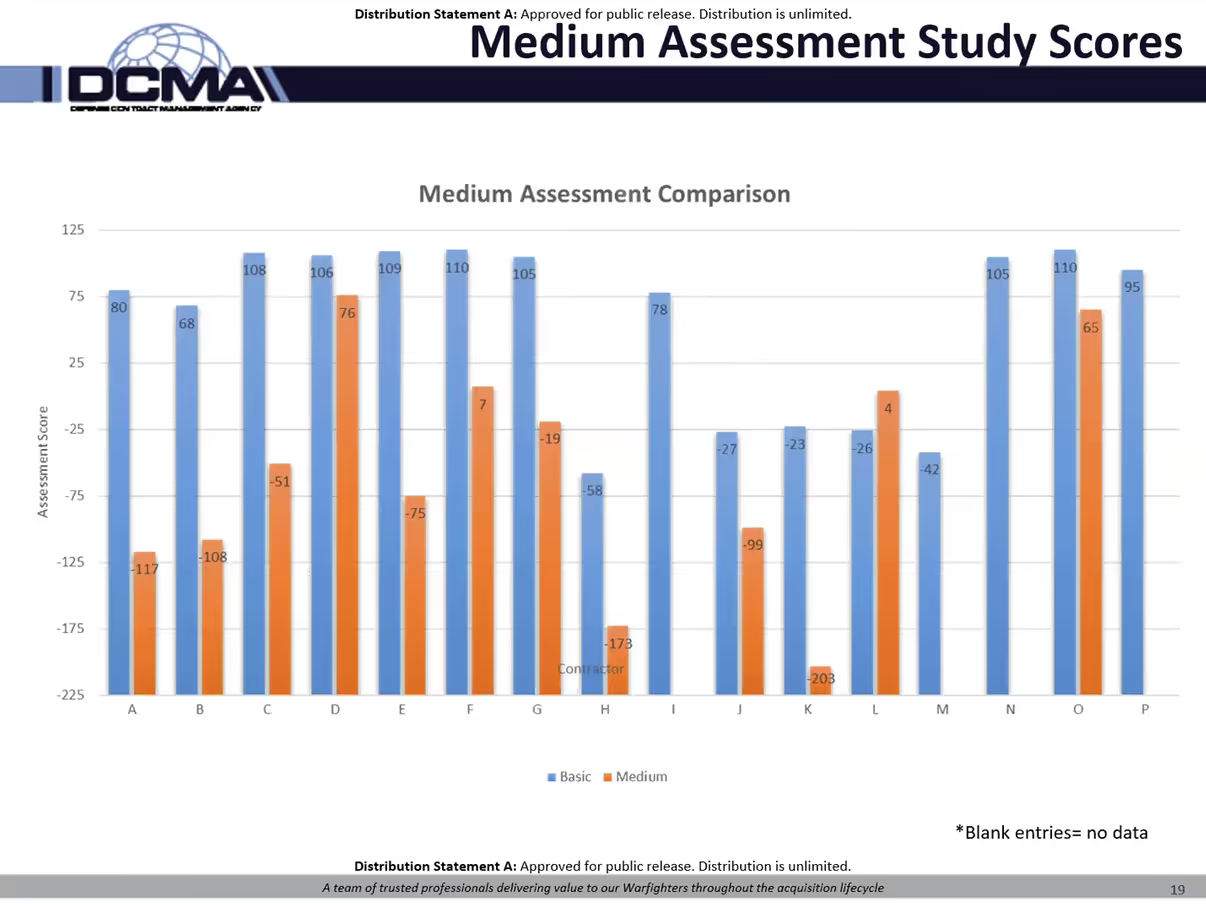

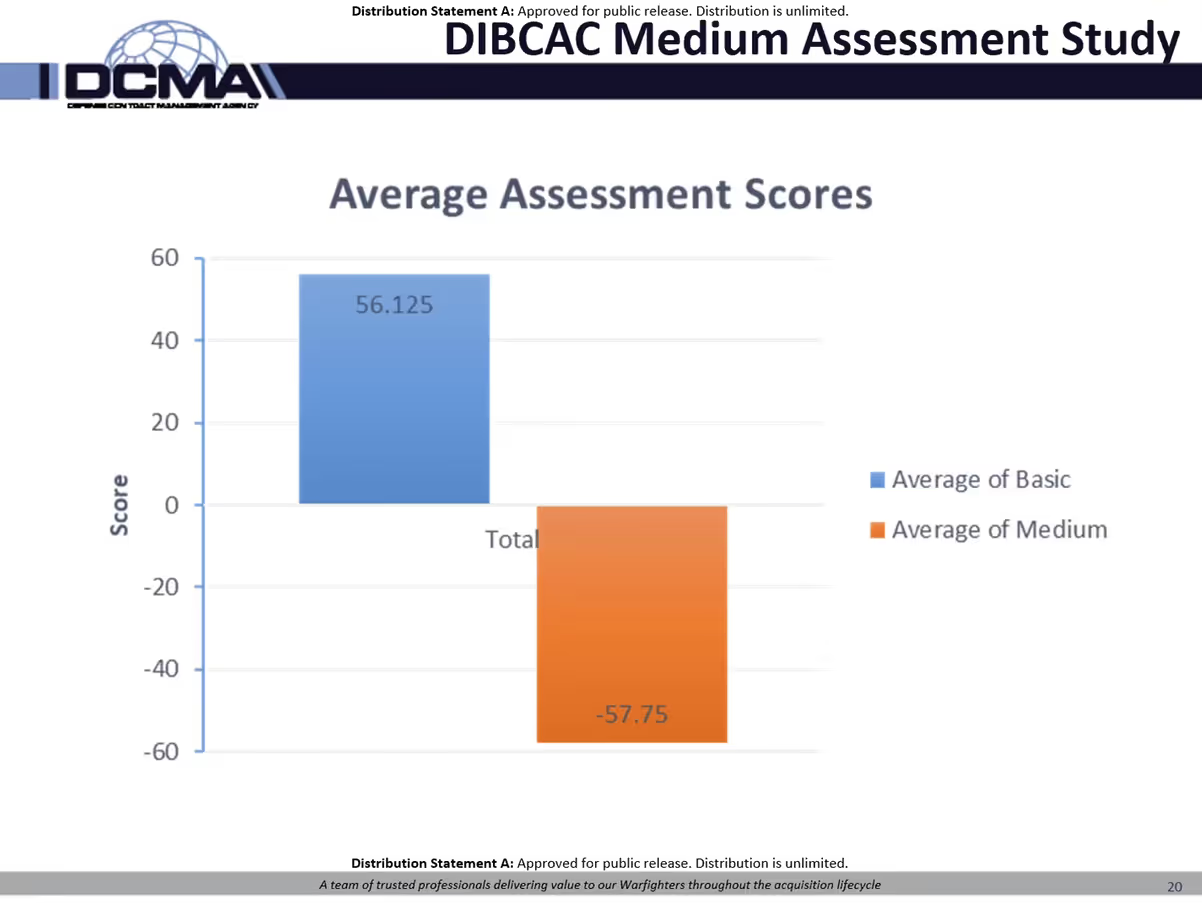

The most interesting slide came about eighteen minutes into the September Town Hall. Nick presented a comparison between 16 Basic Assessment and Medium Assessment scores.

DIBCAC conducts Medium Assessments by reviewing the System Security Plan (SSP). The SSP needs to document how the organization implements the control. Restating the requirements or affirming they meet them is not enough.

The average Basic Assessment score was 56.125 points, but the average Medium Assessment score was -57.75. For reference, 110 is a perfect score and -203 is the worst possible score.
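To put those numbers in context, the sketch below works through the arithmetic. It assumes a deduction-based model in which a score starts at 110 and loses points for each requirement that is not met. The per-requirement weights and the placeholder requirement names are hypothetical; the actual weights are defined in the DoD assessment methodology and are not reproduced here.

```python
PERFECT_SCORE = 110   # from the article
WORST_SCORE = -203    # from the article


def assessment_score(weights, unmet):
    """Start from a perfect score and subtract the weight of each unmet requirement."""
    return PERFECT_SCORE - sum(weights[req] for req in unmet)


# Total points that separate a perfect score from the worst possible score.
max_total_deduction = PERFECT_SCORE - WORST_SCORE

# Gap between the average Basic and Medium scores reported in the comparison.
average_basic = 56.125
average_medium = -57.75
average_gap = average_basic - average_medium

# Hypothetical weights for illustration only.
example_weights = {"3.13.11": 5, "example-requirement-a": 3, "example-requirement-b": 1}
example_score = assessment_score(example_weights, {"3.13.11", "example-requirement-b"})

print(max_total_deduction)  # 313
print(average_gap)          # 113.875
print(example_score)        # 104
```

The average gap of nearly 114 points is more than a third of the full 313-point range, which helps explain why this slide stood out.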

DIBCAC's parent organization is the Defense Contract Management Agency (DCMA). In March 2022, the agency announced it would start conducting Medium assessments. As reported by FCW, the goal is to better understand "the level of compliance... with smaller companies who compose the majority of the DIB".

Many articles helped spread the word that DIBCAC was conducting Medium assessments. DIBCAC then noticed many companies were updating their assessments with lower scores. This prompted a more formal evaluation, in which they compared scores from before and after the March announcement.

They found more than 150 scores had declined by more than 100 points. The most notable was a contractor that went from having a perfect score to the worst possible score. They also found entries with a significant increase in scores. Nick didn’t elaborate on how many or what change in the score they deemed significant.

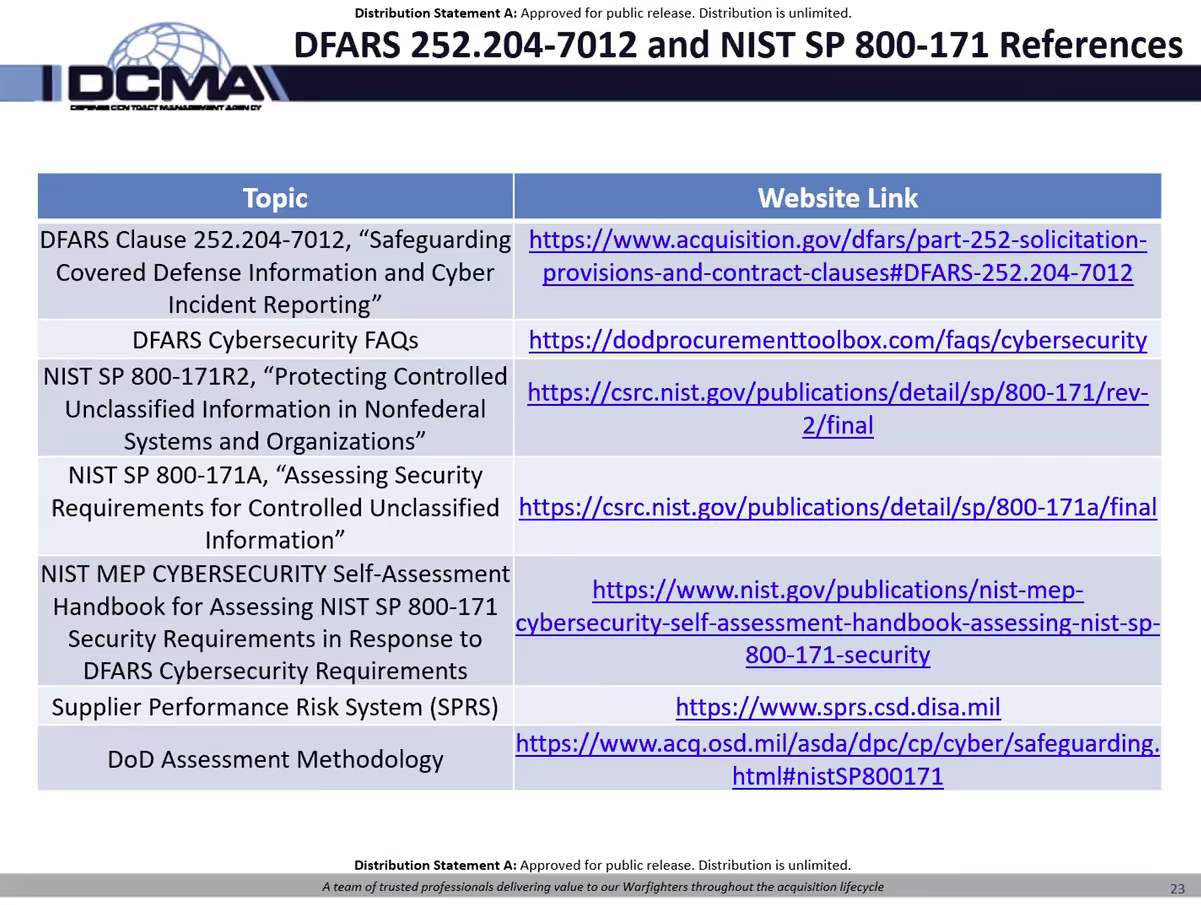

DIBCAC will continue the medium assessment study to better understand what is driving the shift to lower self-assessed scores. One hypothesis is a lack of understanding of the existing requirements. To this effect, Nick provided a full list of references organizations can use to better understand the existing requirements.

One notable update to this list is the withdrawal of the MEP Cybersecurity Self-Assessment Handbook for Assessing SP 800-171. The National Institute of Standards and Technology (NIST) has withdrawn this publication and replaced it with Special Publication 800-171A.

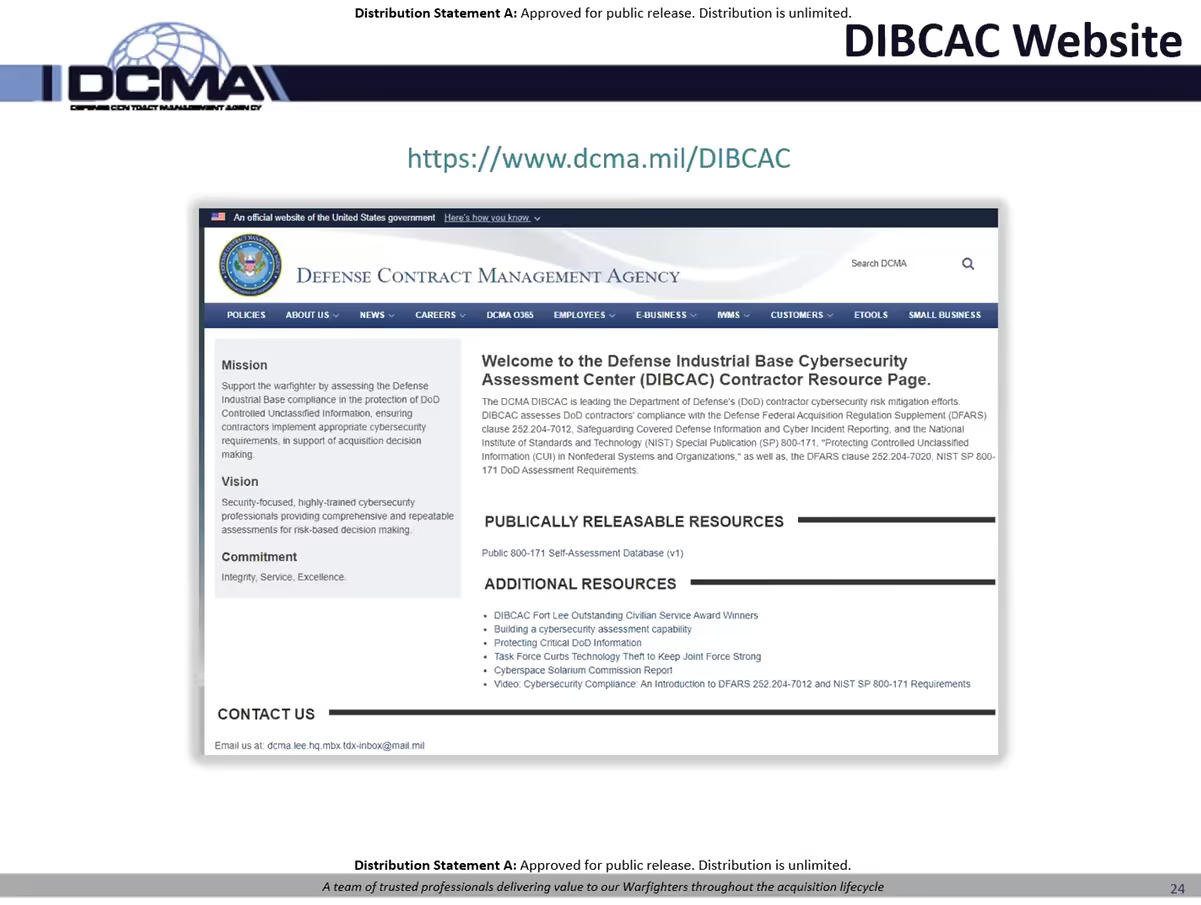

On the subject of resources, Nick introduced an updated landing page for the DIBCAC within the Defense Contract Management Agency (DCMA) website. On the main page is their Public 800-171 Self-Assessment Database (v1), which is the same as what their assessors use.

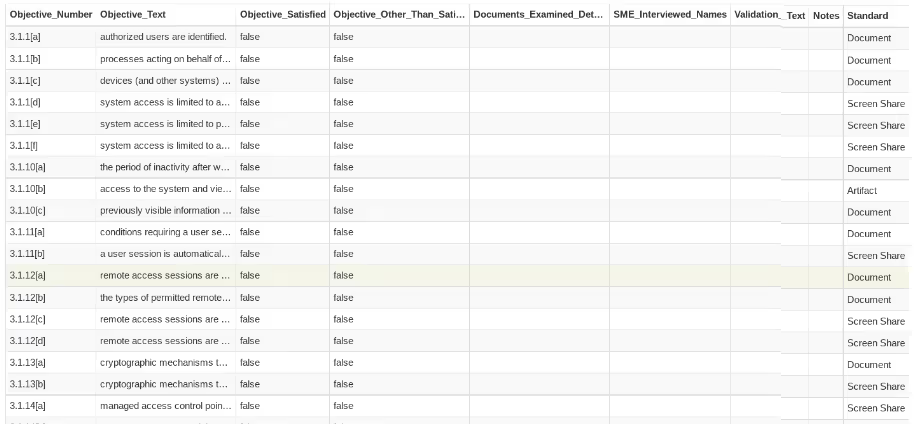

In looking at this ACCDB (database) file, there are three tables (Family Names, Objectives and Requirements) and two tables linking Requirements to Family Names and Requirements to Objectives.

We presume the workflow would begin with marking each objective in the objective table as “true” if satisfied or "false" if it is other than satisfied. There are also columns representing documents and persons interviewed. This leads us to believe the next column, entitled Validation_Text, may relate to observations made or tests performed. Next is a column for any notes taken. The last column, “Standard”, has values that may list the type of desired evidence for each objective. These values include document, screen share, and artifact.
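To make that structure easier to picture, here is a minimal sketch that mirrors the five tables in an in-memory SQLite database. Only the column names Validation_Text and Standard come from the file as described above; every other table name, column name, and sample row is an assumption for illustration, and the real file is an Access database rather than SQLite. The roll-up rule, where a requirement counts as met only when all of its objectives are marked true, is also our assumption about how the data would be used.

```python
import sqlite3

# Sketch only: names are hypothetical except Validation_Text and Standard.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE family_names (family_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE requirements (requirement_id TEXT PRIMARY KEY, description TEXT);
CREATE TABLE objectives (
    objective_id TEXT PRIMARY KEY,
    satisfied INTEGER,           -- 1 = satisfied, 0 = other than satisfied
    documents TEXT,
    persons_interviewed TEXT,
    Validation_Text TEXT,
    notes TEXT,
    Standard TEXT                -- e.g. document, screen share, artifact
);
CREATE TABLE requirement_family (requirement_id TEXT, family_id INTEGER);
CREATE TABLE requirement_objective (requirement_id TEXT, objective_id TEXT);
""")

# Made-up sample rows: REQ-1 has one unmet objective, REQ-2 is fully met.
conn.executemany(
    "INSERT INTO objectives (objective_id, satisfied, Standard) VALUES (?, ?, ?)",
    [("REQ-1[a]", 1, "document"),
     ("REQ-1[b]", 0, "screen share"),
     ("REQ-2[a]", 1, "artifact")],
)
conn.executemany(
    "INSERT INTO requirement_objective VALUES (?, ?)",
    [("REQ-1", "REQ-1[a]"), ("REQ-1", "REQ-1[b]"), ("REQ-2", "REQ-2[a]")],
)


def requirement_status(connection):
    """Map each requirement to True only if every linked objective is satisfied."""
    rows = connection.execute("""
        SELECT ro.requirement_id, MIN(o.satisfied)
        FROM requirement_objective AS ro
        JOIN objectives AS o ON o.objective_id = ro.objective_id
        GROUP BY ro.requirement_id
        ORDER BY ro.requirement_id
    """).fetchall()
    return {req: bool(all_met) for req, all_met in rows}


status = requirement_status(conn)
print(status)
```

In this example REQ-1 is reported as not met because one of its two objectives is marked false, while REQ-2 is met.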

Introducing The CAICO

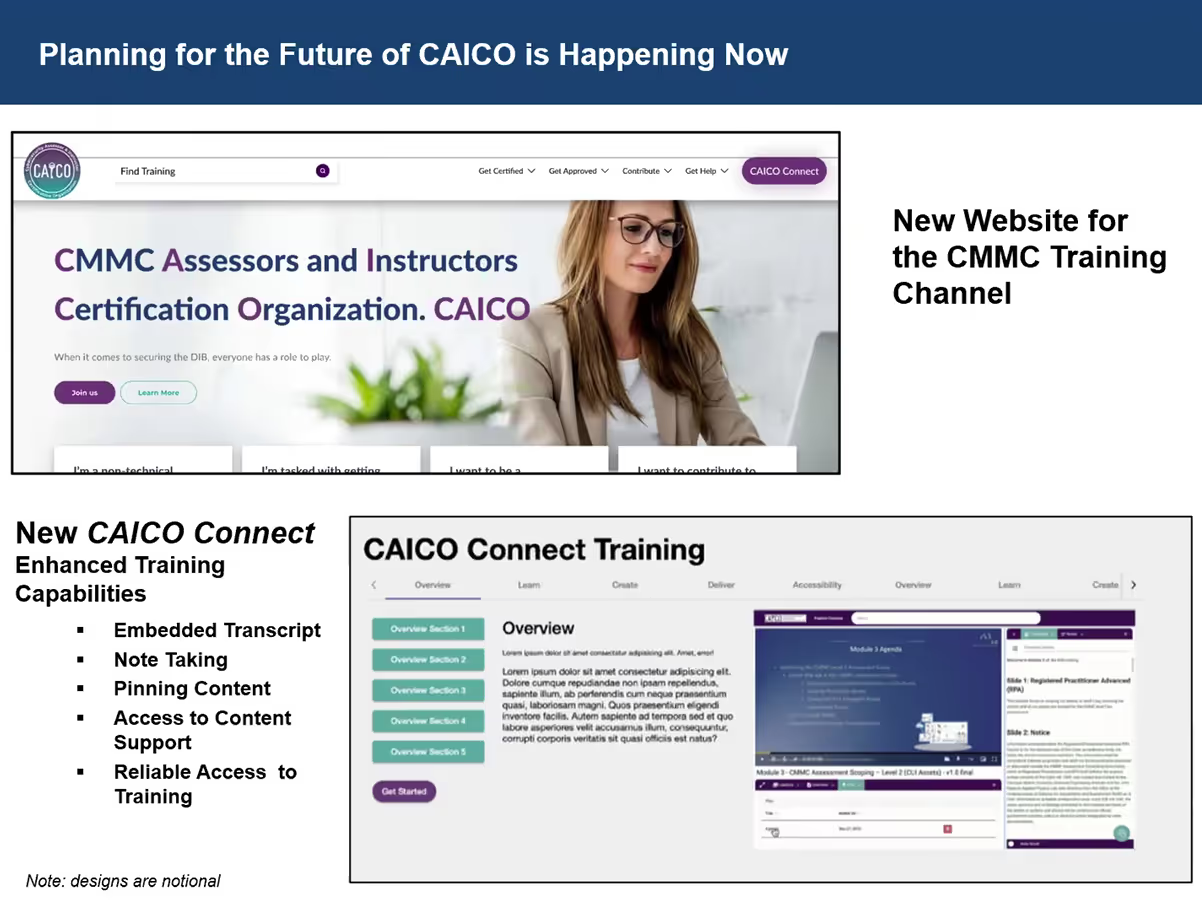

The Cyber AB introduced the Cybersecurity Assessor & Instructor Certification Organization (CAICO). The CAICO is a new entity in the CMMC ecosystem that will certify assessors and instructors. Kyle Gingrich will lead this entity as the interim Executive Director working for a Board of Managers. They plan to launch a website in Q1 of 2023 along with new branding, encrypted badges and new partner enablement tools.

They also plan on launching a CAICO Connect experience. The CAICO Connect will serve assessors and instructors, connecting them to the ecosystem. The new logo introduced utilizes a key with the silhouette of a person. Kyle explained that this represents “the individual is the key to everything we are doing within CMMC”.

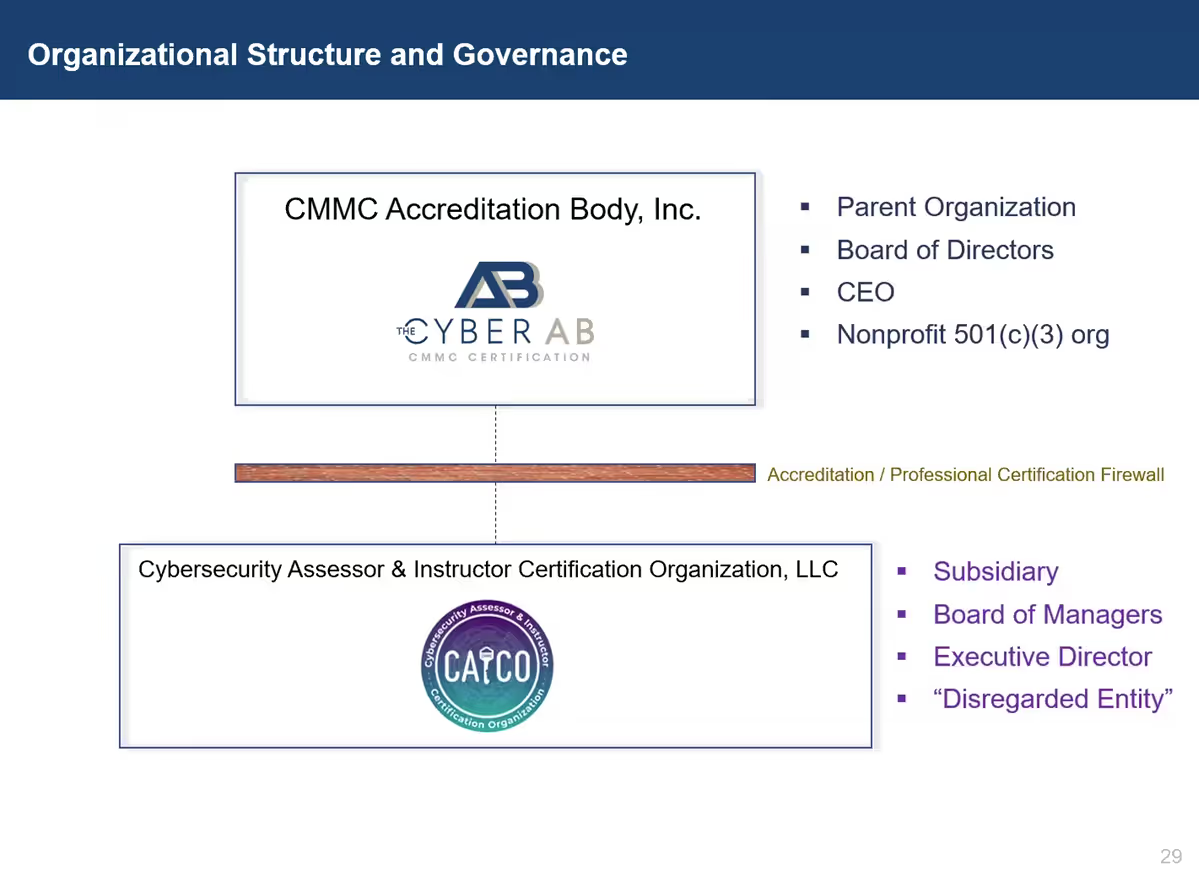

The Cyber AB is subject to International Organization for Standardization (ISO) standard 17011. Accrediting bodies must not provide any service that affects their impartiality. These services include certifying persons or provisioning skill testing. Linked accreditation bodies overseeing conformity assessment activities and certifying individuals should have:

- Different top management

- Different personnel performing the accreditation decision-making process

- Distinctly different names, logos, and symbols

- Effective mechanisms to prevent any influence on the outcome of any accreditation

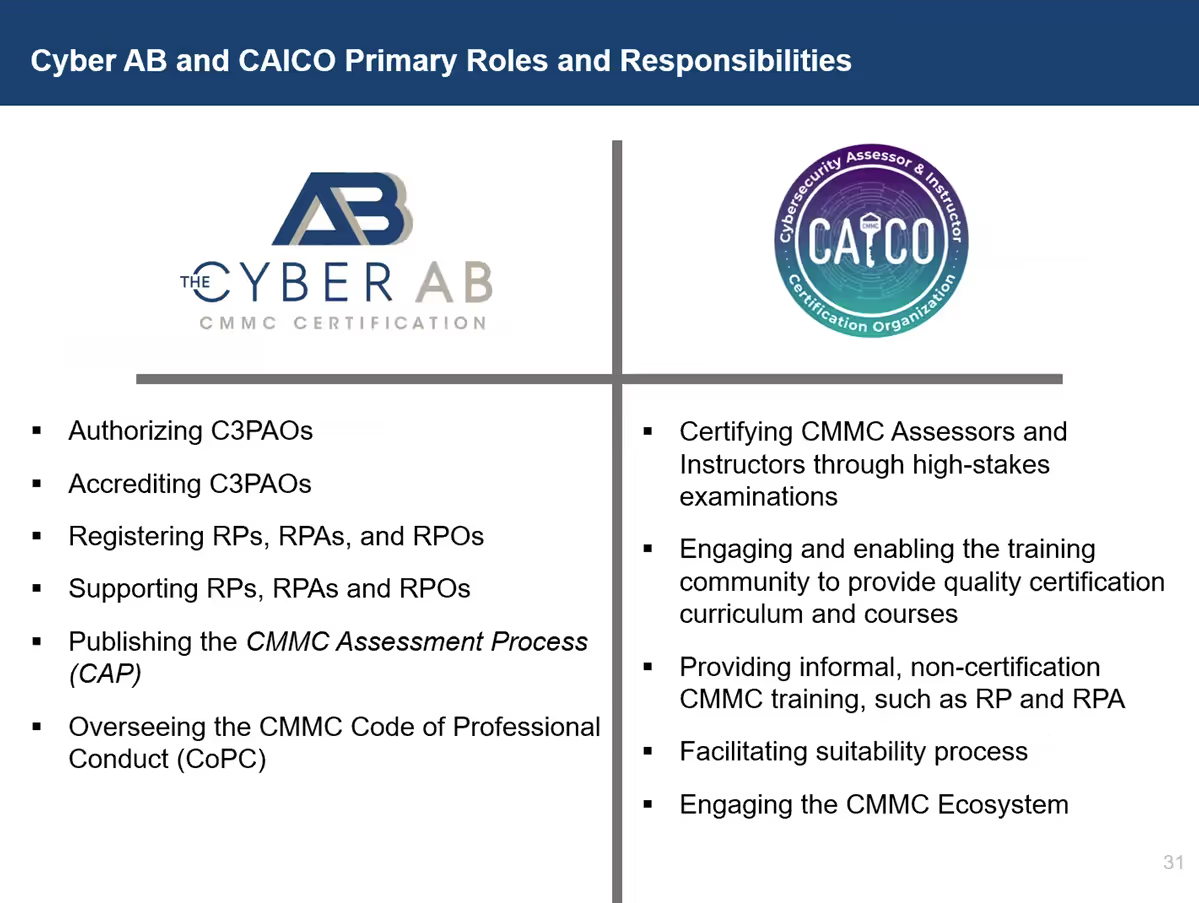

The Cyber AB will continue to accredit C3PAOs and support consultants. They will also publish the CMMC Assessment Process (CAP) and oversee the CMMC Code of Professional Conduct (CoPC). The CAICO will take over responsibilities associated with certifying assessors and instructors. They will also provide non-certification CMMC training for consultants and conduct suitability processes.

Ecosystem Updates

There are now 26 authorized C3PAOs in the CMMC Marketplace.

The Cyber AB website will soon list all feedback they’ve received on the CMMC Assessment Process (CAP).

The Certified CMMC Professional (CCP) beta examination testing for provisional assessors has concluded. Statistical analysis of the data will determine the passing score. Provisional assessors and CCPs can register for the CCP exam launching on October 19th.

Questions and Answers

September’s Town Hall ended with a question and answer session featuring responses from Nick DelRosso. Nick addressed some key questions so we’re happy to recap them below:

Question: How is the Federal Information Processing Standard (FIPS 140-2) assessed?

Answer: FIPS-Validated Cryptography protects the confidentiality of information. This is applicable when transmitting information over a medium that you cannot control (e.g. internet). DIBCAC looks for the FIPS-validated certificate from NIST. There’s some flexibility allowed by the DoD. For example, temporary deficiencies are not penalized.

Question: How is the DIBCAC reconciling its procedures with those of the draft CAP?

CMMC is comparable to what we do with DFARS 252.204-7012. The assessment includes demonstrations and data from the contractor. There’s always going to be some variation and we make sure that we’re flexible. Different C3PAOs are going to conduct assessments with different methods. As long as we’re seeing the information that we need from the DIBCAC perspective, that’s what matters.

Question: Why are MSPs (Managed Service Providers) required to implement FedRAMP moderate controls?

Question 115 from the DoD Procurement Toolbox talks about the requirements for Cloud Service Providers (CSPs). Sometimes an MSP acts as a CSP and vice versa. The requirements applicable to them depend on how they’re operating. If they act as a CSP, they are responsible for meeting security requirements equivalent to the FedRAMP Moderate baseline.

Question: Will voluntary assessments be available until the publication of the final rule?

Right now, DCMA views this program as a great way to cover more of the DIB. Situations may change in the future, but for the moment it looks like it’s going to help DoD extend its reach in identifying risk.

Question: Does a DIB company have an obligation to mark ITAR documents with CUI banner information?

For anything ITAR, you want to make sure that you follow all your export control regulations. I would recommend you contact either the program office or your ITAR program representatives.

Question: Will you share lessons learned to help companies prepare for a certification assessment?

We want to share lessons learned with the community to help future assessments go smoother. We want to cooperate as much as we can with the DIB.

Question: Can you share any of the unofficial results from the Joint Surveillance program?

No, we don’t talk about specific companies, especially when we only have three. They may or may not want their names announced in the future.

Question: Will voluntary assessments receive DIBCAC high dual credits?

Provided it meets all the requirements of a DIBCAC high assessment, we enter the score into SPRS. There’s always a caveat that the DIBCAC score may differ from the C3PAO score but the score in SPRS is the one that the DIBCAC supports.

Question: Will Joint Surveillance assessments allow Plans of Action and Milestones?

Joint Surveillance assessments allow for plans of action and milestones. DIBCAC will follow up to verify the completion of your POA&Ms after the assessment.

Question: Isn’t there a risk that a C3PAO and DIBCAC can then reach different conclusions?

Even if procedures differ, we shouldn’t see a huge, crazy difference in results. At the end of the day, there may be different interpretations of the same requirement. If DIBCAC assessors see a practice as other than satisfied, then they have an open and frank discussion. Different parties may see things differently, but I hope that would be the rare exception instead of the common case.
